- Google is updating the Jetpack CameraX library to support capturing Ultra HDR images.

- Ultra HDR is a new image format introduced in Android 14 that enables saving the SDR and HDR versions of an image in the same file.

- Currently, only camera apps that use the Android Camera2 API can capture Ultra HDR images.

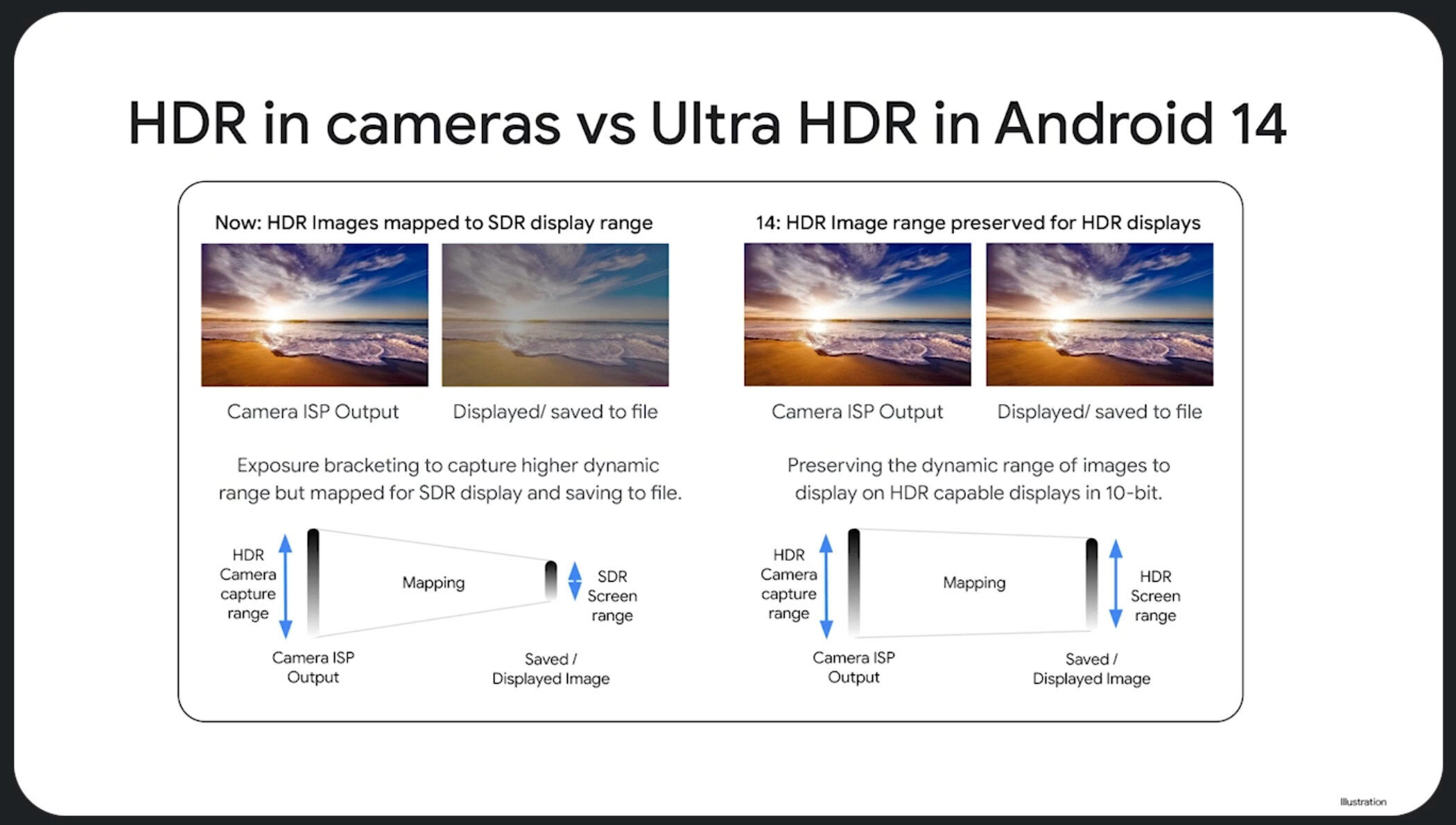

One of the most exciting recent advancements in smartphone photography is true HDR support. The camera HDR processing that you’re probably already familiar with uses computational photography and image stacking to achieve an HDR-like effect, but the resulting image is still SDR. Starting with Android 14, though, several Android phones are capable of capturing true HDR photos using a format called Ultra HDR. Many third-party apps with built-in camera functionality still can’t capture photos in Ultra HDR, but that’s set to change soon.

Ultra HDR, if you aren’t familiar, is a Google-made image format that’s based on the popular JPEG format. Because it’s based on JPEG, Ultra HDR images can be viewed on almost any device, regardless of whether or not it has an HDR display. What makes Ultra HDR images special, though, is that when they’re viewed on a device with an HDR display, the HDR version of the image is shown, offering more vibrant colors and greater contrast.

This is possible because Ultra HDR images are JPEG files that have an HDR gain map embedded in their metadata, which apps can apply over the base SDR version of the image that’s also contained within the file. Since this HDR gain map has to be created and saved to the JPEG file’s metadata when the image is first created, existing camera apps need to be updated to support this step. Google created an API in Android 14 for camera apps to do just that, but this API is part of Camera2, not CameraX, limiting its adoption.
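To give a rough feel for what “applying” a gain map over the SDR base image means, here’s a simplified single-pixel sketch in Java. It loosely follows my understanding of the published Ultra HDR format description, but it leaves out the offset and gamma metadata entirely, and the class, method, and parameter names (GainMapSketch, applyGain, displayWeight, and so on) are made up for illustration. Treat it as a sketch of the idea, not as how any real decoder is implemented.

```java
/** Simplified, hypothetical sketch of applying an HDR gain map to one linear SDR pixel value. */
final class GainMapSketch {
    private GainMapSketch() {}

    /**
     * @param sdr           linear-light SDR pixel value
     * @param gain          gain map sample for this pixel, normalized to [0, 1]
     * @param minBoost      boost applied where the gain sample is 0 (assumed metadata value)
     * @param maxBoost      boost applied where the gain sample is 1 (assumed metadata value)
     * @param displayWeight 0 for an SDR display, 1 for a display that can show the full HDR range
     * @return the boosted pixel value to show on the target display
     */
    static double applyGain(double sdr, double gain, double minBoost,
                            double maxBoost, double displayWeight) {
        // Interpolate between the minimum and maximum boost in log2 space.
        double logBoost = log2(minBoost) * (1.0 - gain) + log2(maxBoost) * gain;
        // Scale the boost by how much HDR headroom the display has, then apply it.
        return sdr * Math.pow(2.0, logBoost * displayWeight);
    }

    private static double log2(double x) {
        return Math.log(x) / Math.log(2.0);
    }
}
```

With a display weight of 0, the pixel comes back unchanged, which is the same thing that happens in practice when an SDR display or an older app ignores the gain map and just shows the base JPEG.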

Camera2 is the API that’s bundled with the Android OS framework and is intended to be used by apps that want to deploy advanced camera functionality. Think full-fledged third-party camera apps with pro-level controls over multiple lenses. CameraX, on the other hand, is an API that’s bundled with the Jetpack support library and is aimed more at apps that only need access to the camera for adjacent functionality. Think social media apps that let you quickly snap a picture to share with your friends. It’s up to developers to choose which camera API they use, but because new camera features are developed for Camera2 first, developers who rely on CameraX have to wait for Google to bring that functionality over. Some Camera2 features never make it over to CameraX due to the simplified scope of the latter, but fortunately, Ultra HDR capture support is not one of those features.

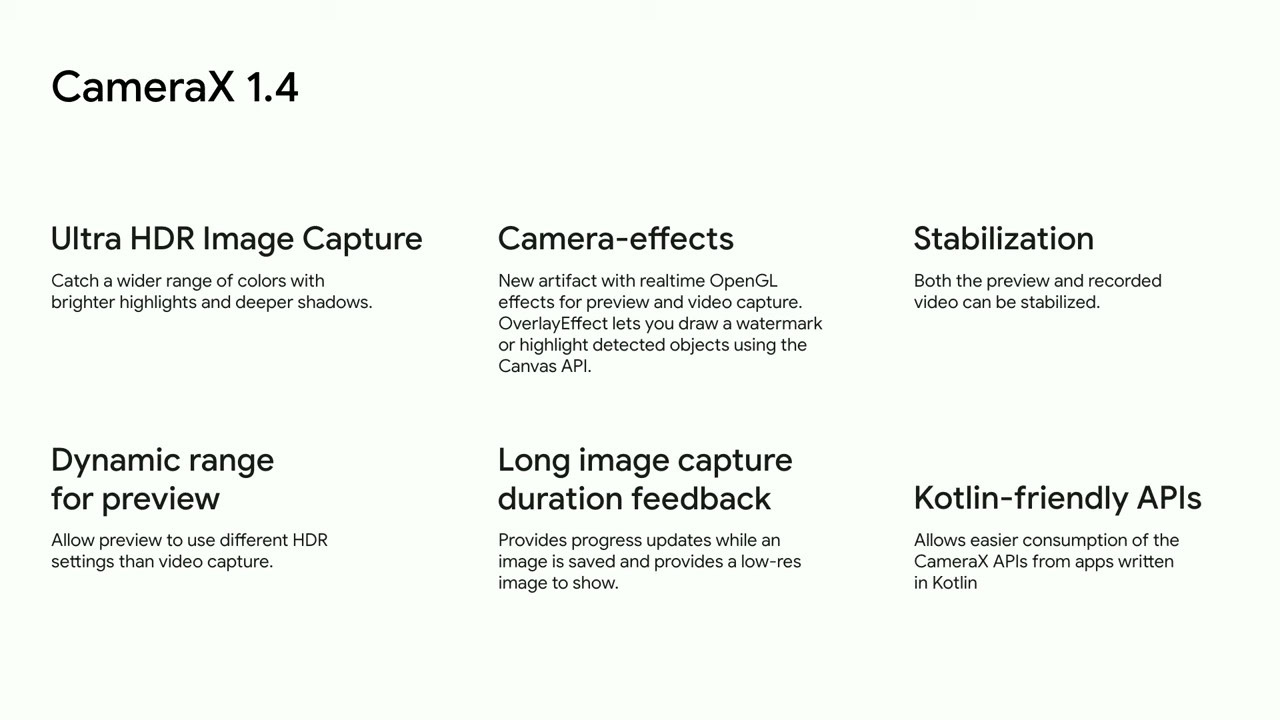

Google announced earlier this year at its I/O 2024 developer conference that it would update its CameraX library to support Ultra HDR image capture. Peeking at the release notes for the CameraX library, we can see that initial support for Ultra HDR capture was added in version 1.4.0-alpha05, which was released in April. Version 1.4.0 of CameraX will introduce new output format APIs to the ImageCapture and ImageCaptureCapabilities classes.

These APIs include a getSupportedOutputFormats method in ImageCaptureCapabilities that apps can use to query whether the device is capable of capturing Ultra HDR images. This should be possible on any device running Android 14 or above, since the encoder library is bundled with that version of the operating system, though I’m not 100% sure. If the output format is set to OUTPUT_FORMAT_JPEG_ULTRA_HDR on a device that supports Ultra HDR image capture, then the CameraX library will capture Ultra HDR images in the “JPEG/R” image format. (The “R” in “JPEG/R” stands for “Recovery map,” which refers to the HDR gain map that gets embedded in the JPEG file.)
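The query-then-fall-back pattern described above could look something like the following Java sketch. To keep it runnable outside of an Android project, it works on a plain set of integers and uses placeholder constants in place of the real ones in ImageCapture, so the numeric values here are assumptions for illustration only. The CameraX calls named in the comments (getImageCaptureCapabilities and setOutputFormat, alongside the getSupportedOutputFormats method mentioned above) reflect my reading of the 1.4.0 API and should be checked against the official reference before you rely on them.

```java
import java.util.Set;

/** Hypothetical helper that picks an output format from whatever the device reports as supported. */
final class OutputFormatChooser {
    private OutputFormatChooser() {}

    // Placeholder values standing in for ImageCapture.OUTPUT_FORMAT_JPEG and
    // ImageCapture.OUTPUT_FORMAT_JPEG_ULTRA_HDR. In a real app, use the library constants.
    static final int OUTPUT_FORMAT_JPEG = 0;
    static final int OUTPUT_FORMAT_JPEG_ULTRA_HDR = 1;

    /**
     * Prefers Ultra HDR when the device supports it and falls back to plain JPEG otherwise.
     *
     * In an app, supportedFormats would presumably come from something like
     * ImageCapture.getImageCaptureCapabilities(cameraInfo).getSupportedOutputFormats(),
     * and the result would be passed to ImageCapture.Builder#setOutputFormat(int).
     */
    static int chooseOutputFormat(Set<Integer> supportedFormats) {
        if (supportedFormats.contains(OUTPUT_FORMAT_JPEG_ULTRA_HDR)) {
            return OUTPUT_FORMAT_JPEG_ULTRA_HDR;
        }
        return OUTPUT_FORMAT_JPEG;
    }
}
```

Checking the supported formats first matters because, as noted above, it isn’t certain that every Android 14 device can capture Ultra HDR, and falling back to regular JPEG keeps the capture path working either way.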

As noted in the API description, these images will seamlessly appear as regular JPEG files on older apps or devices with SDR displays, whereas they’ll appear as HDR on apps and devices that have been fully updated to support the format. Not many apps support Ultra HDR at the moment; Google Chrome is currently the only one that fully supports it across Android and desktop. Devices that can properly display Ultra HDR images in their full glory include Samsung’s Galaxy S24 series, Google’s Pixel 7 and Pixel 8 series, the OnePlus 12 and OnePlus Open, and a couple of others. Some newer Windows PCs with HDR displays, such as my Lenovo Yoga Slim 7X, can also display Ultra HDR images.

The benefits of Ultra HDR images are hard to explain via text, so if you have a device that’s capable of viewing them, I recommend seeing a few for yourself. Here’s a gallery of Ultra HDR photos I took of Qualcomm’s San Diego campus using my OnePlus Open. Thanks to Ultra HDR, these images don’t have the typical “overprocessed” look that current camera HDR processing tends to produce. The stock camera app on my OnePlus Open captured these Ultra HDR images, though it only recently gained this capability after an OTA update. Third-party apps that use the Jetpack CameraX library should hopefully start saving images in the Ultra HDR format soon after version 1.4.0 of the library is released.